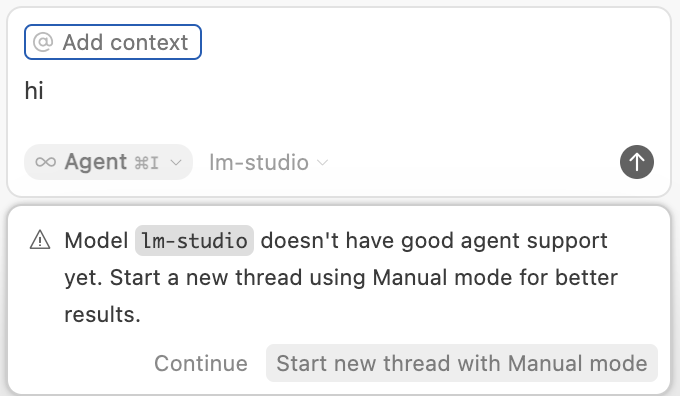

If you’re looking for a way to use a local LLM with Cursor, see this comment, which covers the essentials. Below are the same steps, adapted for LM Studio.

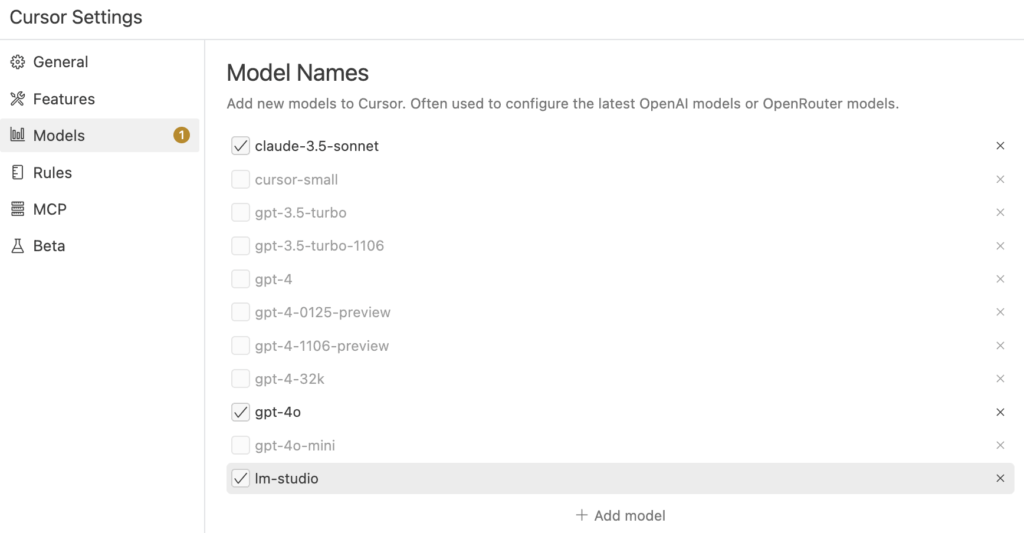

First, add a model name in Cursor. I used ‘lm-studio’.

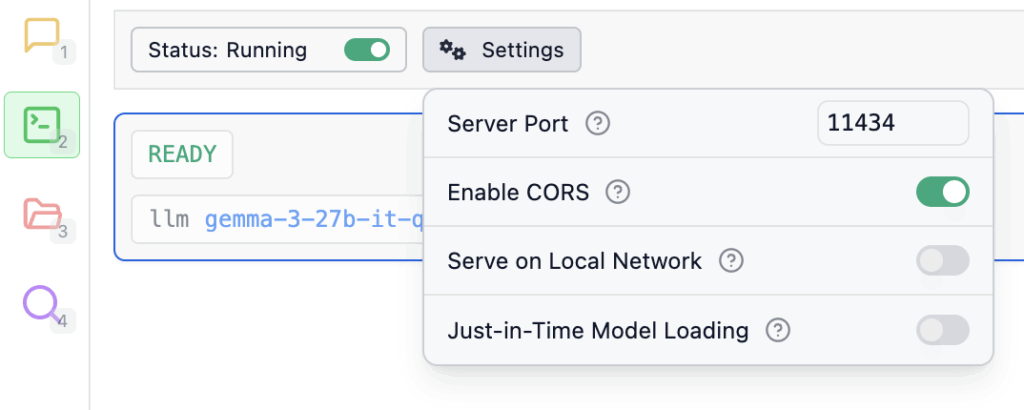

In LM Studio, load a model of your choice and go to the Developer tab. Under Settings, update two things: the port number (optional) and CORS (enable it).
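Before going further, it can help to confirm the LM Studio server actually answers on the port you picked. Below is a minimal sketch, assuming the server exposes the OpenAI-style `/v1/models` listing and that the port is 11434 (the one forwarded later in this post). The helper names `models_url`, `parse_model_ids`, and `list_models` are made up for illustration.

```python
import json
import urllib.request


def models_url(port: int) -> str:
    """URL of the OpenAI-style model listing on the local server."""
    return f"http://localhost:{port}/v1/models"


def parse_model_ids(body: str) -> list[str]:
    """Pull model ids out of an OpenAI-style {"data": [{"id": ...}]} reply."""
    return [entry["id"] for entry in json.loads(body).get("data", [])]


def list_models(port: int, timeout: float = 5.0) -> list[str]:
    """Ask the local server which models it serves (the server must be running)."""
    with urllib.request.urlopen(models_url(port), timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

Calling `list_models(11434)` with the server running should return the ids of the loaded models. A connection error usually means the server is not started or the port does not match.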

Now run the command below in a terminal. This step is required for two reasons: 1) Cursor needs the port to be 80, and 2) it wants a non-localhost address. Without it, #2 in particular fails with “Request failed with status code 403: Access to private networks is forbidden”. In the command, 11434 is the local port being forwarded, so it must match the port you set in LM Studio.

Please visit https://serveo.net/ and read it first, so you understand that this step exposes your LM Studio server to the internet. I tried editing /etc/hosts and using socat instead, but neither worked. Maybe Cursor checks the resolved IP.

ssh -R 80:localhost:11434 serveo.net

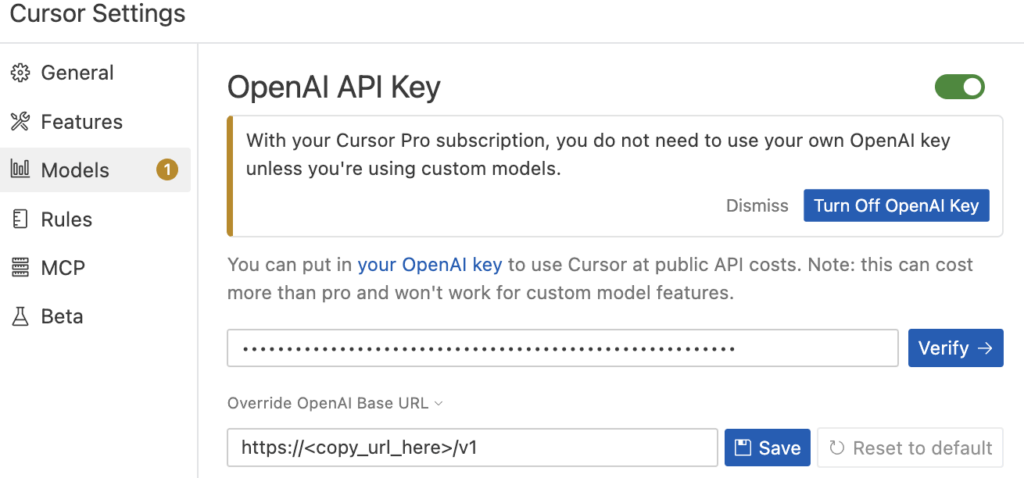

The command above prints a URL. Copy it. Go to Cursor’s model settings and fill in the OpenAI API key with an arbitrary string (it doesn’t need to be a real OpenAI API key). Paste the copied URL into “Base URL”, and be sure to append “/v1” at the end. Then Save and Verify to turn on the OpenAI API Key section.
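If Verify fails, you can test the tunnel outside Cursor. The sketch below builds the same base URL (with “/v1” appended) and prepares an OpenAI-style chat request against it. It assumes the server accepts the standard `/chat/completions` route and ignores the API key value. `cursor_base_url` and `chat_request` are hypothetical helper names, and the model id you pass should be one your server actually reports.

```python
import json
import urllib.request


def cursor_base_url(tunnel_url: str) -> str:
    """Normalize the tunnel URL into the value for Cursor's "Base URL" field."""
    base = tunnel_url.strip().rstrip("/")
    return base if base.endswith("/v1") else base + "/v1"


def chat_request(base_url: str, model: str, prompt: str,
                 api_key: str = "anything") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),  # a body makes this a POST
        headers={
            "Content-Type": "application/json",
            # Arbitrary string, mirroring the placeholder key entered in Cursor.
            "Authorization": f"Bearer {api_key}",
        },
    )
```

To send it, pass the request to `urllib.request.urlopen(...)` and decode the JSON reply. If that works from your machine but Cursor still fails, the problem is more likely in Cursor’s settings than in the tunnel.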

That’s basically it! You’ll see lm-studio as one of the models.